Though a variety of models guide our instructional design work, I’d argue that ADDIE functions as the basic backbone of the process. Just about every model, trend, and best practice in the field supports one of the phases of ADDIE.

So with this in mind, it seems appropriate to take a look at the articles posted to this blog over the past year and organize them according to how they jibe with ADDIE.

A = Analysis (analyze the problem/opportunity and its causes)

Two of this year’s articles primarily address analysis. “Rethink Refresher Training” suggests that we take time to analyze the cause of performance gaps. “eLearning and an Aging Workforce” looks at a specific angle of audience analysis.

D = Design (design the solution, create a blueprint)

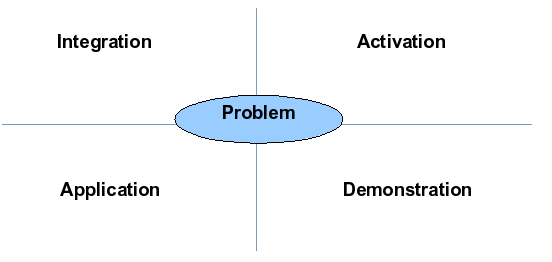

“Anatomy of an eLearning Lesson: Nine Events of Instruction” and “Anatomy of an eLearning Lesson: Merrill’s First Principles” each describe a model that guides eLearning lesson design from start to finish. Three more articles focus on specific components of those models:

- 6 Techniques that Stimulate Recall in eLearning

- 7 Techniques to Capture Attention in eLearning

- Start and End eLearning Courses with Methods That Facilitate Learning

As organizations continually move toward adopting eLearning and even converting instructor-led training to eLearning, the following articles offer us guidance:

- Don’t Convert! Redesign Instructor-Led Training for eLearning

- Using eLearning in a Blended Approach

- Brainstorming for eLearning: Rules of Brainstorming

Two more articles offer design-oriented food for thought:

D = Development (develop the solution)

After organizing training content and identifying instructional activities during the design phase, we’re ready to put fingers on keyboard to write the instructional materials that learners touch. A few articles from this year addressed writing:

- Writing to Educate and Entertain: What Would Stephen King Do?

- A Formula for Storytelling in eLearning

- Rapid Development the Agile Way

Of course, it’s the programming we do with eLearning authoring tools that results in a polished, interactive learning product. Below are links to articles that offer programming tips:

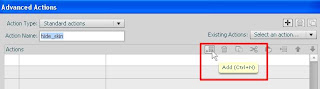

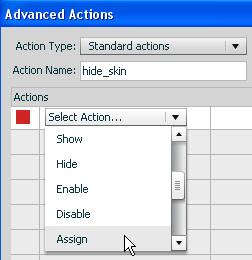

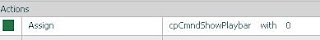

- Hiding Your Skin in Captivate 5

- How to Create Custom Buttons in Captivate

- Yes, Your Captivate Sim Can Drive Your Lectora Course

- Incorporating Your Learner’s Name into Your Lectora Course

- Unlocking the Power of Lectora Variables

I = Implementation (implement the solution)

Though several articles briefly touched on eLearning implementation and change management this year, only one addressed it as a focal point: “eLearning as Part of a Change Management Effort.”

E = Evaluation (measure the solution’s effectiveness)

A post-training evaluation effort allows us to answer the question: did it work? Here are links to articles that address evaluation methodologies:

- Evaluating eLearning in a Crunch

- Collecting Data from an eLearning Pilot

- Use Scenarios to Make Quiz Questions Relevant to the Job

More to come...

At a glance, we seem to address the design part of the process most frequently on this site. As we move into 2011, we’ll continue to share ideas regarding the field’s theories, models, trends, and best practices. And of course, if there are topics that you’re especially interested in seeing, we’re always open to suggestions!

Happy New Year!